Course project for the KAIST Mobile Computing and Applications course (CS442)

Project Summary

Mobile devices are used in many different contexts, which depend on the type of user and the specific situation. To optimize the user experience, the interaction method should recognize and adapt to the user's context in real time. On a typical smartphone, where touch is the predominant interaction method, the user's hand posture is a crucial part of that context. To address this need, we introduce GraspTracker, a system that tracks the user's hand posture in real time using the phone's built-in sensors.

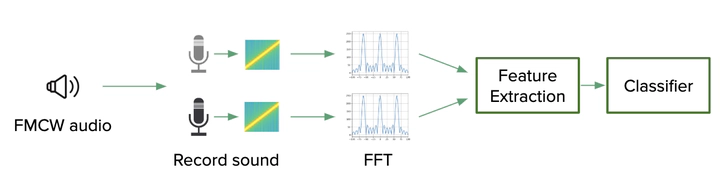

GraspTracker emits a Frequency Modulated Continuous Wave (FMCW) audio signal from the smartphone’s earpiece speaker and records the sound with two separate microphones. It then applies a Fast Fourier Transform (FFT) to each recording to obtain its frequency response and extracts features from the result. Finally, GraspTracker classifies the user’s grasp posture based on the extracted features.
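The signal-processing steps above can be sketched in Python with NumPy. This is a minimal illustration, not the project's actual code: the sample rate, chirp frequency range, chirp duration, number of bands, the Hann window, and the band-averaging feature scheme are all assumptions chosen for the example, and the two "recordings" are simulated rather than captured from real microphones.

```python
import numpy as np

# All constants below are illustrative assumptions, not values from the project.
SAMPLE_RATE = 48_000   # Hz
F_START = 17_000       # Hz, start of the chirp sweep
F_END = 23_000         # Hz, end of the chirp sweep (below Nyquist)
CHIRP_DURATION = 0.01  # seconds
N_BANDS = 16           # number of frequency bands per microphone


def make_chirp(f_start=F_START, f_end=F_END,
               duration=CHIRP_DURATION, sample_rate=SAMPLE_RATE):
    """Generate one linear FMCW chirp sweeping from f_start to f_end."""
    t = np.arange(int(duration * sample_rate)) / sample_rate
    sweep_rate = (f_end - f_start) / duration  # Hz per second
    phase = 2 * np.pi * (f_start * t + 0.5 * sweep_rate * t ** 2)
    return np.sin(phase)


def band_features(recording, f_start=F_START, f_end=F_END,
                  sample_rate=SAMPLE_RATE, n_bands=N_BANDS):
    """Return the mean FFT magnitude in n_bands equal slices of the chirp band."""
    windowed = recording * np.hanning(len(recording))
    spectrum = np.abs(np.fft.rfft(windowed))
    freqs = np.fft.rfftfreq(len(recording), d=1 / sample_rate)
    in_band = (freqs >= f_start) & (freqs <= f_end)
    bands = np.array_split(spectrum[in_band], n_bands)
    return np.array([band.mean() for band in bands])


def feature_vector(mic_a, mic_b):
    """Concatenate the band features of the two microphone recordings."""
    return np.concatenate([band_features(mic_a), band_features(mic_b)])


# Simulated recordings: the second microphone hears an attenuated copy.
chirp = make_chirp()
mic_a = chirp
mic_b = 0.5 * chirp
features = feature_vector(mic_a, mic_b)
```

A feature vector like this, computed for each labeled recording, could then be used to train a scikit-learn classifier (for example `sklearn.svm.SVC`) to predict the grasp posture. The choice of classifier here is an assumption, since the description does not name one.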

Libraries & Frameworks

- Arduino

- Python (scikit-learn)